Introduction

With a shift towards modern development practices, Serverless architecture has become a goal for many of our heavily regulated and security-conscious clients. As the shift towards cloud computing accelerates, many clients are striving to understand the challenges and opportunities that a Serverless approach provides while defining what a scaled cloud implementation means for their organisation.

What Is Serverless Architecture?

Simply put, Serverless architecture is a way to build software that will run on a server fully managed by a cloud provider instead of a server operated by your organisation.

To get a bit more background on what Serverless is before continuing, please refer to our ‘Introduction to Serverless Architecture’ blog.

Enterprise Challenges

Of the Serverless challenges mentioned in ‘Introduction to Serverless Architecture’, vendor lock-in and diminished control are common blockers for enterprises across most industries.

Vendor lock-in is difficult to mitigate for heavily regulated organisations such as banks and governments in some regions that have a requirement to be independent of any single cloud provider. In this situation, a more appropriate cloud strategy would be containers, which can be deployed consistently to any of the hyperscale cloud providers.

Diminished control over the configuration of fully managed services can be incompatible with enterprise security and compliance policies if they have not been modernised for the cloud. For example, an organisational requirement for a particular operating system type and version cannot be complied with because you are unable to pick the operating system in services that the cloud provider fully manages. Similarly, meeting Service Level Agreement (SLA) requirements may also be challenging as the SLAs are provided by the cloud provider with limited ability to expand or change them within the service configuration or application scope.

The most significant challenge for enterprises looking to adopt Serverless is how they typically manage their cloud environments and the development and deployment of applications to the cloud across their organisation. This applies especially to the finance industry where strict regulation makes it harder to make significant changes to how technology concerns are managed. As a result, many financial organisations are forced to take a more traditional IT approach of having separate teams for provisioning, application and operations, and strict limits on what each team can do in the cloud. This approach is more compatible with their existing IT policies, and it can certainly work when using virtual servers in the cloud. However, with Serverless, this approach is detrimental to productivity.

Serverless architecture differs from server-based architecture in two ways that matter here. First, a given solution will consist of many different cloud resources – significantly more than a typical server-based solution. For example, a legacy application might run on a single server with one or two additional servers for redundancy and scaling. A similar Serverless solution might consist of 30 or more microservices integrated with several managed cloud services such as queuing, notifications, storage and more. Second, resource configuration and application code are far more intertwined with Serverless – the configuration and associated service often replace what would be code in a server-based solution. Because of this, such configurations should be part of the same code base as the application so that they can be modified, tested and deployed together.

To provision a server, you need to know the size, storage capacity, and required permissions and connectivity. To provision the resources required for Serverless architecture, you need to determine multiple microservice configurations, multiple service configurations, their individual permissions and how they all integrate. It is not easy to scope these requirements in detail without actually building the solution iteratively – especially for teams less experienced in Serverless architecture. Without the ability to provision resources themselves, application teams sometimes resort to using personal and other external Amazon Web Services (AWS) accounts to build an initial version, or they need to constantly raise provisioning and infrastructure change requests with the provisioning team. Both workarounds pose significant security and productivity challenges.

For such enterprises to make Serverless productive and available at an organisational level, they will need first to evolve how they manage their cloud environments.

Evolve How Cloud Is Managed Before Adopting Serverless

To adopt Serverless, enterprises in any industry need to evolve their cloud approach to enable the application teams to provision, configure and update approved cloud resources themselves – at least within development environments. Providing application teams with controlled access to the cloud can be achieved using ‘cloud controls’. In this cloud strategy, a combination of service whitelisting and security controls is put in place so that the application team has the freedom to experiment, provision resources and create proofs of concept while being kept within defined guardrails. Such controls can also enforce configuration rules such as requiring a firewall in front of APIs, disabling specific configuration options in services, requiring encryption, disabling public internet connectivity, and much more.

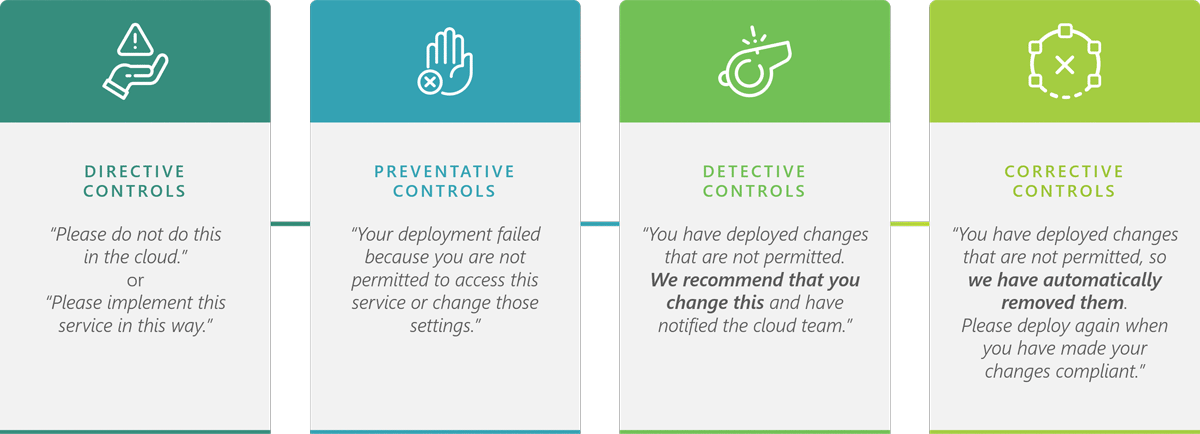

Cloud security controls comprise four different methods of securely managing a cloud account. The security team will typically implement these controls via a master organisational account and apply them to all department or project accounts. All well-managed cloud accounts should have these implemented to avoid security gaps and inconsistencies across an organisation’s cloud accounts. Organisations can implement controls with a mix of existing cloud services and custom microservices or containers for more complex organisation-specific rules.

Directive Controls

Directive controls are typically written in best practices documentation and provided as guidance to developers working with the cloud account. They place a certain level of trust in the developers to follow the provided directions and best practices, and configure the cloud infrastructure correctly.

Preventive Controls

Preventive controls are hard stops that prevent developers from taking specific actions. These could include not permitting the use of overseas regions, for example. The service limits in AWS could be considered preventive controls, and AWS Organizations Service Control Policies (SCPs) can use whitelists to permit access only to approved services within the account.
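As a rough illustration of a whitelist-style preventive control, the sketch below builds a policy document in the standard IAM JSON policy format that an SCP uses. The service and region lists, the `build_scp` helper and the statement IDs are assumptions made up for this example, not a recommended baseline. A real region restriction would also need to exempt global services such as IAM, which this sketch does not do.

```python
import json

# Hypothetical values for illustration only; a real list would come from
# the organisation's own register of approved services and regions.
APPROVED_SERVICES = ["lambda", "dynamodb", "sqs", "sns", "s3", "logs"]
APPROVED_REGIONS = ["ap-southeast-2"]


def build_scp(approved_services, approved_regions):
    """Return a policy document that denies anything outside the allow-lists."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Deny every action that does NOT belong to an approved service.
                "Sid": "DenyUnapprovedServices",
                "Effect": "Deny",
                "NotAction": [f"{name}:*" for name in sorted(approved_services)],
                "Resource": "*",
            },
            {
                # Deny requests made to any region outside the approved list.
                "Sid": "DenyUnapprovedRegions",
                "Effect": "Deny",
                "Action": "*",
                "Resource": "*",
                "Condition": {
                    "StringNotEquals": {
                        "aws:RequestedRegion": sorted(approved_regions)
                    }
                },
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_scp(APPROVED_SERVICES, APPROVED_REGIONS), indent=2))
```

Keeping the allow-lists as data means the security team can review a change to the approved services as a small diff rather than a hand-edited policy.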

Detective Controls

Detective controls monitor all changes in a cloud account after the fact for anything not meeting the defined rules. When non-compliance is detected, a notification can be sent to someone to track the situation and follow up if needed. No direct action is taken by the control itself other than to log the event and optionally send the notification. This allows teams the freedom to experiment in the cloud while still tracking and reporting things that don’t meet the rules. AWS Config, Macie and GuardDuty are services that can be used to implement detective controls and monitor for situations such as S3 buckets being publicly available, APIs being deployed without a firewall or access keys being stored in an S3 bucket. A detective control in CloudFormation makes it possible to detect unauthorised manual changes to the infrastructure configuration, known as ‘drift’.
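The behaviour described above (evaluate, record, optionally notify, never change anything) can be sketched in a few lines. This is a simplified stand-in and not how AWS Config or GuardDuty work internally. The resource dictionaries, rule names and the `notify` callback are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional


@dataclass(frozen=True)
class Finding:
    resource_id: str
    rule: str


def bucket_is_private(resource: dict) -> bool:
    # Compliant when the (hypothetical) "public" flag is absent or False.
    return not resource.get("public", False)


def api_has_firewall(resource: dict) -> bool:
    # Compliant when the (hypothetical) "firewall_attached" flag is True.
    return bool(resource.get("firewall_attached", False))


# Rules grouped by resource type; each entry is (rule name, compliance check).
RULES = {
    "bucket": [("bucket-not-public", bucket_is_private)],
    "api": [("api-behind-firewall", api_has_firewall)],
}


def detect(
    resources: Iterable[dict],
    notify: Optional[Callable[[Finding], None]] = None,
) -> List[Finding]:
    """Report non-compliant resources without modifying any of them."""
    findings = []
    for resource in resources:
        for rule_name, is_compliant in RULES.get(resource["type"], []):
            if not is_compliant(resource):
                finding = Finding(resource["id"], rule_name)
                findings.append(finding)
                if notify is not None:
                    notify(finding)  # e.g. send a message to the account owner
    return findings


if __name__ == "__main__":
    inventory = [
        {"id": "assets", "type": "bucket", "public": True},
        {"id": "orders-api", "type": "api", "firewall_attached": True},
    ]
    for finding in detect(inventory, notify=print):
        pass  # findings were already printed by the notify callback
```

Because `detect` only returns and reports findings, a team can keep experimenting while the security team sees what falls outside the rules.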

Corrective Controls

Corrective controls are similar to detective controls in that they monitor after the fact for any activity that is not permitted. However, unlike detective controls, these controls will automatically change or undo the disallowed activity. While a development environment might prefer detective controls, a production environment will likely use corrective controls. These controls can be implemented as an extra step on the detective controls. Instead of sending a notification to a user, they can notify a Lambda microservice to perform an automated action, for example.
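As a minimal sketch of that extra step, the handler below reacts to a "bucket is public" finding by turning on the S3 public access block for the bucket. The event shape (`{"bucket": ...}`) and the `make_handler` factory are assumptions for this example. The only AWS call used is the S3 `put_public_access_block` operation, and the client is passed in so the handler can be exercised without an AWS account.

```python
def make_handler(s3_client):
    """Build a Lambda-style handler that undoes public access on a bucket.

    s3_client is expected to behave like boto3.client("s3").
    """

    def handler(event, context=None):
        # Hypothetical event shape: the detective control passes the bucket name.
        bucket = event["bucket"]
        s3_client.put_public_access_block(
            Bucket=bucket,
            PublicAccessBlockConfiguration={
                "BlockPublicAcls": True,
                "IgnorePublicAcls": True,
                "BlockPublicPolicy": True,
                "RestrictPublicBuckets": True,
            },
        )
        # Return a small record so the correction itself can be logged.
        return {"bucket": bucket, "action": "public-access-blocked"}

    return handler
```

In a deployed function this might be wired up as `handler = make_handler(boto3.client("s3"))`. The same finding could be routed to a notification in development and to this handler in production, which is one way to vary the control type by environment.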

Detective and corrective controls can run on a schedule or automatically after any change to the cloud environment to detect or correct non-compliance instantly.

When to Use Which Control Type?

Which controls to use is closely linked to the type of environment and the maturity of the organisation.

- Production environments will typically use more preventive and corrective controls.

- Development environments can be more flexible with directive and detective controls.

Less mature organisations and those in heavily regulated industries tend to have a lower risk appetite and favour preventive and corrective controls even in development environments.

For Serverless to be productive, the organisation should implement predominantly directive and detective controls, at least in the development environment.

You can learn about this in more detail in the Sourced Cloud Deployment Patterns whitepaper.

Policy Challenges

For financial organisations that have transitioned to a controls approach, there may still be policy hurdles to resolve in using Serverless services. Hard requirements on specific configuration settings or operating systems remain prevalent in the industry. However, whether these rules are from government regulators or self-imposed is not always clear.

In contrast to the more traditional banks, we see that younger banks and organisations in the same industry seem to have considerably more freedom in the cloud, in some cases leap-frogging to Serverless architecture and skipping servers entirely.

The following industry use case for ‘Building a Serverless Platform’ has been taken from the Serverless Chats one-hour interview with NorthOne bank’s technical lead Patrick Strzelec.

NorthOne bank is a deposit account bank for small businesses. Their core banking system is entirely Serverless. The initial pre-launch system was developed with just four developers, and today they run the entire platform with a team of 25. Onboarding has proven to be considerably easier and faster too: a developer who was new to the team was able to design, develop and launch a production service in only a month.

The full video can be found here, and it’s definitely worth the watch to see what is possible when IT policies are evolved for the cloud.

Conclusion

There are many benefits of using a Serverless and microservices approach to applications in the cloud, especially from a cost-savings and application team productivity perspective when applied to the right type of application. For Serverless to work for an enterprise, the approach to managing the cloud needs to be suitable. For heavily regulated organisations such as those in the finance industry, the policy changes required for a more flexible approach to cloud that is compatible with Serverless may be slow to arrive, often making this strategy a long-term goal to strive for. However, younger organisations in the finance industry are steadily proving that it is possible if an effort is made to modernise policies and embrace the next-generation capabilities of the cloud.

Thomas is a Lead Consultant at Sourced with over 18 years of experience in delivering digital products. He gained a passion for evangelising Serverless architecture through his enthusiasm for cloud and entry into the AWS Ambassador programme.