Introduction

Is ‘Serverless’ just a new buzzword or is it a way to considerably reduce cloud operational cost for suitable applications? In this blog post, we provide an introduction to Serverless to help you determine whether it is relevant to your organisation.

If you prefer video, a video version of this blog post is available:

Watch the video

What Is Serverless Architecture?

Simply put, Serverless architecture is a way to build software that will run on a server fully managed by a cloud provider instead of a server operated by your organisation.

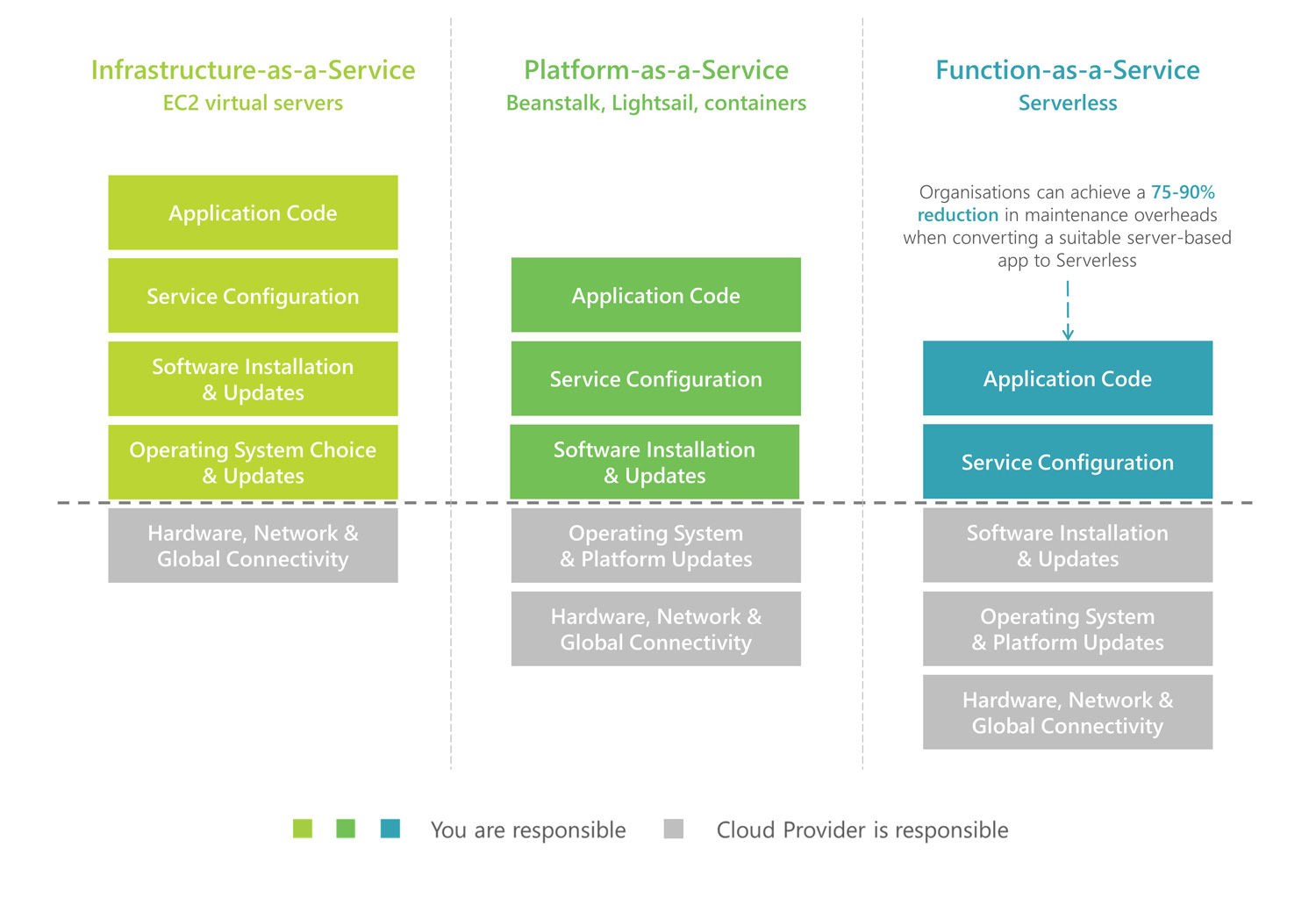

The word ‘Serverless’ is a bit of a misnomer, as servers are involved in storing and running your code. It is called ‘Serverless’ because the organisation’s application teams no longer need to manage, update or maintain the underlying servers, operating systems or software.

Redundancy, load balancing, networking and, to some extent, security are also largely managed, maintained and monitored by the cloud provider’s dedicated 24/7 operations team.

With Serverless technologies, the application team can focus entirely on the cloud configuration – not to be underestimated – and the application code. In short, the team spends its time on the activities that deliver a Return on Investment (ROI) rather than on the underlying ‘plumbing’.

Cloud-Native vs Fully-Managed vs Serverless

These terms are all related but not synonymous.

‘Cloud-native’ refers to applications that have been designed for the cloud. This could be Serverless, but it could just as easily include servers with cloud-specific features such as autoscaling.

‘Fully-managed’ refers to services in the cloud where the cloud provider manages the operating system and software. This includes many of the database services in the cloud. These services are deployed on servers and are billed based on time, but the organisation does not need to maintain the server, operating system, or software.

‘Serverless’ refers to services in the cloud that are fully managed and billed based on actual utilisation. Idle time is not billed, which is the main difference between Serverless and fully managed.
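To make the billing difference concrete, here is a minimal sketch that compares time-based and usage-based billing. The prices, the 730-hour month and the function names are illustrative assumptions, not real provider rates.

```python
# All prices below are hypothetical and chosen only to illustrate the two billing models.
HOURS_PER_MONTH = 730  # assumed average number of hours in a month


def fully_managed_cost(hourly_rate: float, hours: float = HOURS_PER_MONTH) -> float:
    """Time-based billing: every provisioned hour is charged, busy or idle."""
    return hourly_rate * hours


def serverless_cost(requests: int, price_per_million: float) -> float:
    """Usage-based billing: only requests that were actually served are charged."""
    return requests / 1_000_000 * price_per_million


def break_even_requests(hourly_rate: float, price_per_million: float) -> float:
    """Monthly request volume at which both billing models cost the same."""
    return fully_managed_cost(hourly_rate) / price_per_million * 1_000_000


if __name__ == "__main__":
    rate, per_million = 0.10, 2.00  # hypothetical prices
    print("Fully managed, any load:", round(fully_managed_cost(rate), 2))
    print("Serverless, 5M requests:", round(serverless_cost(5_000_000, per_million), 2))
    print("Serverless, idle month:", round(serverless_cost(0, per_million), 2))
    print("Break-even requests:", round(break_even_requests(rate, per_million)))
```

Under these assumed prices, an idle month costs nothing on the Serverless model, while the fully managed service costs the same whether or not it is used.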

Microservices

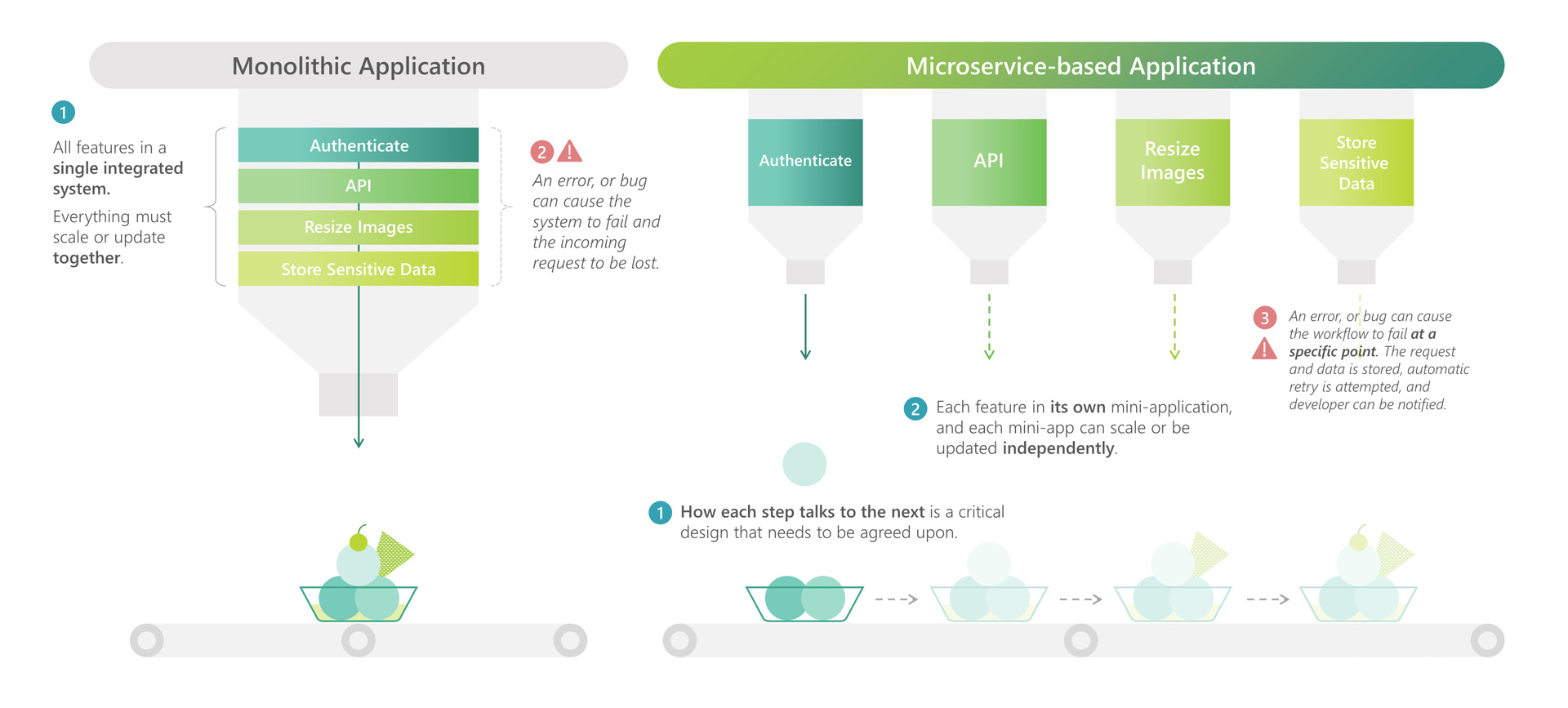

Traditionally, software architecture was monolithic, meaning that the application was written as a single collection of code that contained all its functions. While well-written monolithic applications are often layered to provide some separation between data, function and design, the different layers and the functions within a given layer are still heavily dependent on each other.

Microservices are the opposite of that. They can be thought of as independent mini-applications that handle a specific feature or set of closely related features. Multiple microservices will work together to deliver all of the required features for an entire solution. While microservices can work together, each microservice can fulfil its purpose without depending on other microservices or relying on any shared code.

Microservices can be upgraded and scaled independently, and a request can often automatically recover from errors. For example, if a monolithic application running on a server fails, the entire request is lost. Developers might only find out that the request failed when a user complains about it. If a microservice fails, then the request is still handled up to that particular microservice, and all data is retained. Automatic retries can be implemented and notifications sent to developers if manual intervention is needed. Once the issue is resolved, the request can continue where it left off and complete as intended.
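The recovery behaviour described above can be sketched in a few lines. This is a simplified, in-process illustration: the step functions, `RequestState` and `run_request` are hypothetical names. In a real Serverless solution the retries and state tracking would normally be handled by managed services rather than by a loop like this.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Step = Callable[[Dict], Dict]


@dataclass
class RequestState:
    """Data retained between steps, plus the index of the next step to run."""
    data: Dict
    next_step: int = 0
    alerts: List[str] = field(default_factory=list)


def run_request(steps: List[Step], state: RequestState,
                max_attempts: int = 3, delay_s: float = 0.0) -> bool:
    """Run the remaining steps in order, retrying each one up to max_attempts times.

    Returns False, with state preserved and an alert recorded, if a step keeps failing.
    """
    while state.next_step < len(steps):
        step = steps[state.next_step]
        for attempt in range(1, max_attempts + 1):
            try:
                state.data = step(state.data)
                break  # step succeeded, move on to the next one
            except Exception as exc:
                if attempt == max_attempts:
                    # Stand-in for notifying developers that manual action is needed.
                    state.alerts.append(f"{step.__name__} failed: {exc}")
                    return False
                time.sleep(delay_s)
        state.next_step += 1
    return True


# Hypothetical steps, each standing in for one microservice.
payment_service = {"up": False}


def validate(data: Dict) -> Dict:
    return {**data, "valid": True}


def charge(data: Dict) -> Dict:
    if not payment_service["up"]:
        raise RuntimeError("payment service unavailable")
    return {**data, "charged": True}


def confirm(data: Dict) -> Dict:
    return {**data, "confirmed": True}
```

Calling `run_request([validate, charge, confirm], state)` while the payment service is down returns `False` and leaves `state.next_step` pointing at `charge`, with the validated data intact. Calling it again with the same `state` once the issue is fixed resumes from that step instead of starting over.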

Microservices frequently come up when Serverless is discussed, and they are a typical design pattern for Serverless. However, not all Serverless solutions use microservices, nor does a solution have to be Serverless to use microservices.

Key Benefits of Serverless

Near-Zero Wastage

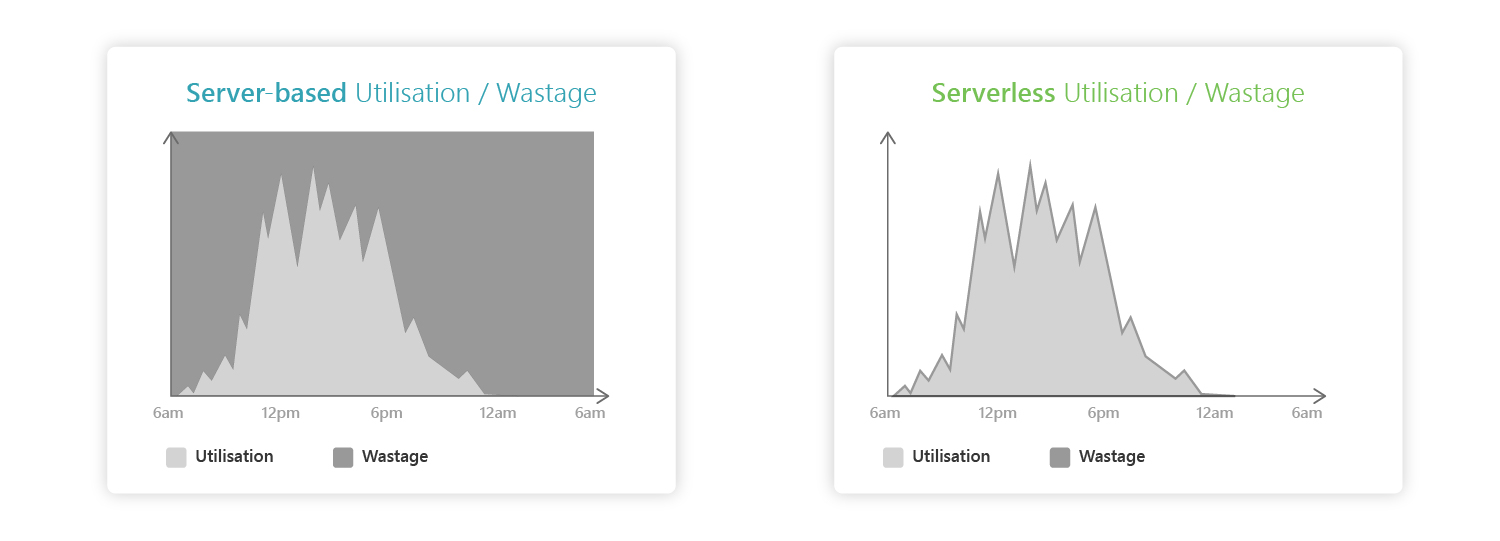

A significant benefit of Serverless is that you pay only for what you use, not for idle time or under-utilisation. In a server-based solution, it is essential to keep some resource margin in place to prevent the application from slowing down or crashing. Even during peak usage, a server should never reach 100% utilisation, as this would mean that it is undersized. Typically, based on industry experience, the utilisation of a server tends to be around 40-50% off-peak and 70% at peak. Taken over a full month, the wastage is usually more than 50%.
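As a rough worked example of how those figures add up, the sketch below computes a time-weighted average utilisation. The split of four peak hours per day is an assumption made for illustration; the utilisation percentages come from the ranges quoted above.

```python
def average_utilisation(peak_hours: float, peak_util: float,
                        off_peak_util: float, hours_per_day: float = 24) -> float:
    """Time-weighted average utilisation over a day."""
    off_peak_hours = hours_per_day - peak_hours
    return (peak_hours * peak_util + off_peak_hours * off_peak_util) / hours_per_day


def wastage(peak_hours: float, peak_util: float, off_peak_util: float) -> float:
    """Share of paid-for capacity that sits unused."""
    return 1 - average_utilisation(peak_hours, peak_util, off_peak_util)


if __name__ == "__main__":
    # Assumed profile: 4 peak hours a day at 70%, the remaining 20 hours at 45%.
    share = wastage(peak_hours=4, peak_util=0.70, off_peak_util=0.45)
    print(f"Wastage: {share:.1%}")
```

With this assumed profile, average utilisation is just under 50%, so slightly more than half of the paid capacity goes unused. A longer peak period would lower the wastage and a quieter off-peak period would raise it.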

This is not just about wasting money – consider the environmental impact of all those servers running and consuming electricity. With Serverless, a thin idle margin is fully managed by the cloud provider to follow your utilisation patterns. This margin will be less than a few per cent each month.

Highly Reusable Microservices

Microservices are developed with a particular purpose in mind. For the more generic microservices, this is likely a purpose that is relevant to many different solutions. Think ‘app store’ instead of ‘project functions’. Every microservice you develop across your solutions is another app added to your internal store that you can reuse in future projects, which can significantly reduce the development effort for new solutions.

Microservices can also be pre-approved for use within an organisation. Provided that the organisation’s compliance requirements are aligned with cloud capabilities, this gives developers a ready-to-use feature for new applications that requires only a minimal security review before being deployed.

Better Access Security

In a monolithic application, the application and server can access all the tables and values in a given database. Every developer working on the application will usually have access to the entire code base and, by extension, all related services and databases.

However, with Serverless, specific permissions can be defined and granted at the table, row and attribute level within a database. This gives you fine-grained control, so a microservice can be given exclusive access to sensitive data or access to only a subset of it. The same approach to permissions can be applied to developers with access to cloud accounts. For example, access can be granted to only the services and microservice code required for their development tasks that sprint.
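As one possible illustration, assuming AWS with DynamoDB as the database, row- and attribute-level restrictions can be expressed in an IAM policy using the `dynamodb:LeadingKeys` and `dynamodb:Attributes` condition keys. The sketch below only builds and prints such a policy document. The table ARN, account ID, key value and attribute names are hypothetical.

```python
import json
from typing import Dict, List


def scoped_read_policy(table_arn: str, partition_key_value: str,
                       attributes: List[str]) -> Dict:
    """Build an IAM policy allowing reads of selected rows and attributes only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["dynamodb:GetItem", "dynamodb:Query"],
                "Resource": table_arn,  # table level
                "Condition": {
                    "ForAllValues:StringEquals": {
                        # Row level: only items with this partition key value.
                        "dynamodb:LeadingKeys": [partition_key_value],
                        # Attribute level: only these attributes may be read.
                        "dynamodb:Attributes": attributes,
                    },
                    # Require the caller to request specific attributes explicitly.
                    "StringEqualsIfExists": {"dynamodb:Select": "SPECIFIC_ATTRIBUTES"},
                },
            }
        ],
    }


if __name__ == "__main__":
    policy = scoped_read_policy(
        table_arn="arn:aws:dynamodb:eu-west-1:123456789012:table/Customers",  # hypothetical
        partition_key_value="tenant-a",                                       # hypothetical
        attributes=["CustomerId", "DisplayName"],                             # hypothetical
    )
    print(json.dumps(policy, indent=2))
```

A policy like this would be attached to the role used by a single microservice, so that each microservice can read only the rows and attributes it needs.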

Agility and DevOps Easier to Implement

Serverless, with microservices as an architecture choice, will push the team to become more agile due to its structure. Each microservice should be tight in its scope, with between one and five user stories typically applied to create one microservice. The structure allows individual microservices to be independently developed, tested, and used during a sprint. This, in turn, means that multiple microservices can be developed simultaneously by different teams. The same concept can be applied to front-end and back-end development of an application.

All Serverless services support automated deployments that are firmly integrated with DevOps services and capabilities. Cloud providers have end-to-end DevOps services that can be integrated with a team’s workflows and any services needed within a solution. This allows for automated deployment with testing and security controls that greatly reduce the chance of errors.

Significantly Lower Maintenance Cost

Server-based solutions require monthly maintenance to test, patch and upgrade operating systems and software. Resolving any issues caused by the upgrades, or by situations such as drives filling up with logs, is also part of maintenance. All this maintenance effort goes away with Serverless solutions because these operational concerns become the cloud provider’s responsibility.

Key Challenges

Vendor Lock-in

In a Serverless solution, you use a whole range of provider-managed services. Many of them are specific and proprietary to the provider with limited, if any, compatibility with other providers. It is possible to make a cloud-agnostic solution that can run on different clouds, but to achieve this, it needs to be built for the lowest common denominator. As such, it will likely not be very cost-effective, and you will miss out on many features and services that are available on more advanced cloud platforms. Serverless is not suitable for organisations with a hard requirement for multi-cloud applications; a more suitable solution would be containers on Kubernetes, for example.

Less Control

With Serverless, you build on top of managed services, which means that many of the underlying configurations ordinarily accessible to developers are no longer available. For example, internal or regulatory policies requiring a specific server configuration may be challenging or even impossible to satisfy with Serverless. You may need to assess and change such policies before you choose Serverless as the solution. Enterprises also still often want to know the IP addresses of cloud applications. While these exist in Serverless, they are entirely managed by the cloud provider and could – and frequently do – change at any time without warning.

Service Level Agreements (SLAs)

SLAs will mostly be outside your area of influence. The cloud provider will provide an SLA for the services, and, typically, you will not be able to guarantee much more than that to your users. Some very new services might not even have an extensive SLA yet. If your users expect certain SLA guarantees, you may not be able to deliver on those in a Serverless solution if the provider has not included them in their SLA. Any existing SLAs you may have should be reviewed, and it should not be assumed that they can apply to a new Serverless solution without change.

Latency

Serverless will generally have more latency per request than a sufficiently sized server because latency adds up with each hop between services and microservices. A ‘cold’ start – when a microservice is requested that has not been used recently (roughly 30 minutes or more, although the exact threshold is set by the cloud provider and can vary) – will have more latency than a warm one. This means less-used solutions can seem slower than those with many active requests. On AWS, placing Lambda microservices in a Virtual Private Cloud (VPC) to interact with servers or relational databases will add latency to their start time. This latency makes it crucial to write highly optimised code and to optimise any requests the microservice makes to other services, such as databases.
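One common optimisation is to do expensive setup once per execution environment, so that only cold starts pay for it and warm invocations reuse the result. The sketch below imitates this locally. The `handler(event, context)` signature follows the AWS Lambda Python convention, while `_create_client` and its 50 ms delay are stand-ins for real setup work such as opening a database connection.

```python
import time

_client = None       # cached across warm invocations of the same environment
_init_count = 0      # counts how often the expensive setup actually ran


def _create_client() -> dict:
    """Hypothetical stand-in for slow setup, e.g. opening a database connection."""
    global _init_count
    _init_count += 1
    time.sleep(0.05)  # simulated setup cost
    return {"connected": True}


def get_client() -> dict:
    """Create the client on first use and reuse it afterwards."""
    global _client
    if _client is None:
        _client = _create_client()
    return _client


def handler(event, context=None) -> dict:
    """Entry point; only the first (cold) invocation pays the setup cost."""
    started = time.perf_counter()
    client = get_client()
    setup_ms = (time.perf_counter() - started) * 1000
    return {"ok": client["connected"], "setup_ms": setup_ms, "inits": _init_count}
```

Calling `handler({})` twice in the same process shows the effect: the first call includes the simulated setup time, and the second call reuses the cached client and reports that setup ran only once.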

Continue Learning

You can get a more in-depth introduction to Serverless in Thomas’ book ‘Serverless – Beyond the Buzzword’ at the link below. The book covers finance, people, strategy and technology and is suitable for anyone interested in learning more about Serverless.

Read more at ServerlessBook.co

Thomas is a Lead Consultant at Sourced with over 18 years of experience in delivering digital products. He gained a passion for evangelising Serverless architecture through his enthusiasm for cloud and entry into the AWS Ambassador programme.