Introduction

Heavily regulated enterprises that handle sensitive commercial and customer data often treat public cloud services as low-trust and enforce inspection, filtering and data loss prevention on data transfers to services such as S3. This introduces complexity and performance overheads for development teams building applications in AWS that rely on S3 for storage.

AWS has released a number of features to support private access to S3, including VPC Endpoints and a variety of directives and conditions for bucket policies. There is also a well-defined pattern for private connectivity to S3 from corporate data centres, and a blog post on how to add domain whitelisting for Internet-bound traffic from inside a VPC using Squid proxies. In this article, I will explore how these solutions can work together to enable private and secure data transfers from on-premises data centres to S3 over an AWS Direct Connect link or a trusted VPN connection.

Solution Overview

The solution enables access from corporate data centres to a set of approved S3 buckets, allowing teams to privately and securely upload data to S3.

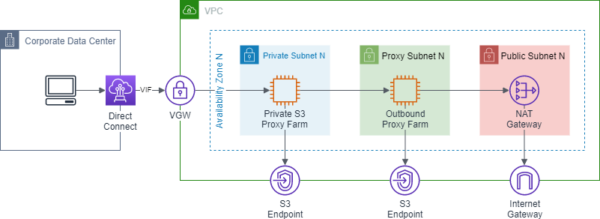

The diagram above shows the network zone design for a data transfer VPC that communicates with S3 through VPC Endpoints. Working from the Internet Gateway inwards:

- The Public Subnet layer contains NAT gateways for routing traffic to an Internet Gateway

- The Proxy Subnet contains outbound proxies for filtering outbound traffic from private instances in AWS

- The Private Subnet contains private proxies that only allow access to the regional S3 endpoint and use upstream outbound proxies for S3 data transfers outside of the current region.

The Private S3 Proxy Layer

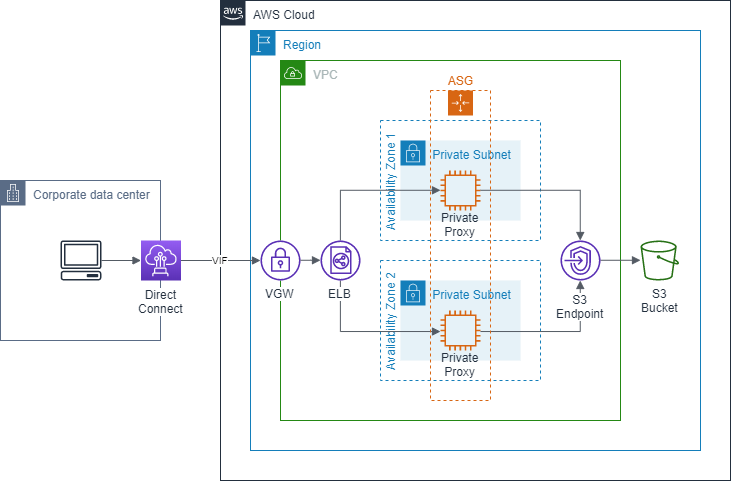

The underlying architecture for the private S3 proxy farm consists of:

- An auto-scaling group spanning multiple Availability Zones that scales on utilisation metrics to meet demand

- A hardened AMI with all the binaries and configuration files embedded to ensure failed instances are replaced in minimal time

- A load balancer with health checks on the auto-scaling group instances to maximise uptime for served traffic

- A set of Route 53 records used for DNS resolution and to enable blue-green redeployment of the stateless proxies

S3 Bucket Policies

S3 supports bucket policies with object-level controls that restrict access exclusively to requests arriving through designated VPC Endpoints.

Note:

- Take care when restricting bucket actions to the VPC Endpoints. If locked out, use the account’s root user as a break-glass procedure to reset the bucket policy.

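- Additional condition keys should be used to secure data at rest and in transit, for example s3:x-amz-server-side-encryption to require server-side encryption and aws:SecureTransport to require TLS.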

- Block Public Access settings limit public access to S3 resources.

```yaml
Resources:
  StorageBucket:
    Type: AWS::S3::Bucket
    ...
  StorageBucketPolicy:
    Type: AWS::S3::BucketPolicy
    Properties:
      Bucket: !Ref StorageBucket
      PolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Sid: S3-Endpoint-Object-Access
            Effect: Deny
            Principal: "*"
            Action: "s3:*"
            Resource: !Sub "arn:aws:s3:::${StorageBucket}/*" # Object ARN
            Condition:
              StringNotEquals:
                "aws:SourceVpce":
                  - "vpce-1a2b3c4d"
                  - "vpce-2b3c4d5e"
```

Figure 3: Example bucket policy for restricted object access
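The same policy can also be generated programmatically, for example when the endpoint IDs are only known at deploy time. The sketch below builds the policy as a Python dictionary; the function name `build_bucket_policy` and the optional `aws:SecureTransport` statement (illustrating the note on data in transit) are assumptions, not part of the template above.

```python
import json


def build_bucket_policy(bucket_name, vpce_ids, require_tls=True):
    """Return a bucket policy that denies object access unless the request
    arrives through one of the given S3 VPC Endpoints (hypothetical helper)."""
    statements = [
        {
            "Sid": "S3-Endpoint-Object-Access",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:*",
            "Resource": f"arn:aws:s3:::{bucket_name}/*",
            "Condition": {"StringNotEquals": {"aws:SourceVpce": list(vpce_ids)}},
        }
    ]
    if require_tls:
        # Assumed extra statement: deny any request that is not made over TLS.
        statements.append(
            {
                "Sid": "Deny-Insecure-Transport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",
                    f"arn:aws:s3:::{bucket_name}/*",
                ],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        )
    return {"Version": "2012-10-17", "Statement": statements}


if __name__ == "__main__":
    policy = build_bucket_policy("my-bucket-001", ["vpce-1a2b3c4d", "vpce-2b3c4d5e"])
    print(json.dumps(policy, indent=2))
```

The resulting JSON string could then be applied with whichever deployment tooling is already in use; the sketch deliberately stops short of calling any AWS API.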

S3 VPC Endpoints and Endpoint Policies

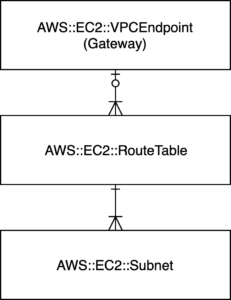

Some AWS services can be accessed privately through VPC Endpoints. S3 is accessed through gateway VPC Endpoints, which are exposed as routes in VPC route tables. Unlike the interface VPC Endpoints available for some services, the gateway endpoints used for S3 and DynamoDB cannot be used from outside their own VPC, which is why on-premises clients in this design reach S3 through the proxy layer inside the VPC.

Below is an example of how the S3 VPC Endpoints are exposed via a VPC route table.

| Destination | Target | Status | Propagated |
|---|---|---|---|
| 10.0.0.0/16 | local | active | No |
| pl-1a2b3c4d (com.amazonaws.ap-southeast-2.s3, 54.231.248.0/22, 54.231.252.0/24, 52.95.128.0/21) | vpce-01abc23456de78f9g | active | No |
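To illustrate how the route table above steers traffic, the sketch below models the lookup as a longest prefix match over the listed CIDRs. It is a simplified model, not how the VPC router is implemented; the `ROUTES` list is copied from the example table, and the function name `route_target` is hypothetical.

```python
import ipaddress

# Routes from the example table; the prefix-list CIDRs may change over time.
ROUTES = [
    ("10.0.0.0/16", "local"),
    ("54.231.248.0/22", "vpce-01abc23456de78f9g"),
    ("54.231.252.0/24", "vpce-01abc23456de78f9g"),
    ("52.95.128.0/21", "vpce-01abc23456de78f9g"),
]


def route_target(destination, routes=ROUTES):
    """Return the target of the most specific route covering destination,
    or None when no route matches."""
    ip = ipaddress.ip_address(destination)
    best = None
    for cidr, target in routes:
        network = ipaddress.ip_network(cidr)
        if ip in network and (best is None or network.prefixlen > best[0].prefixlen):
            best = (network, target)
    return best[1] if best else None


print(route_target("52.95.132.2"))  # vpce-01abc23456de78f9g
print(route_target("10.0.1.25"))    # local
print(route_target("8.8.8.8"))      # None
```

Any S3 address inside the prefix list is handed to the gateway endpoint, while addresses outside every route have no target in this table.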

Multiple S3 VPC Endpoints can be created in the same VPC, but a route table can be associated with only one of them, so each endpoint serves a different set of route tables and subnets. This is important because it allows granular control of S3 access from different network zones within your environment. In this topology, it delivers the following outcomes:

- The VPC Endpoint attached to the Proxy Subnet zone allows retrieval (read-only) of objects from private or public buckets within AWS

- The VPC Endpoint attached to the Private Subnet zone allows read and write access only to specific private whitelisted buckets

Figure 5. Cardinality of S3 Endpoints to Subnet

S3 VPC Endpoint Policy for Proxy Subnet Zone

The sample endpoint policy below restricts S3 access through the Proxy Subnet endpoint to read-only object operations, so requests from instances in the VPC that pass through the outbound proxies can only retrieve objects.

```yaml
Resources:
  ProxySubnetS3Endpoint:
    Type: AWS::EC2::VPCEndpoint
    Properties:
      VpcId: !Ref Vpc
      ServiceName: !Sub "com.amazonaws.${AWS::Region}.s3"
      RouteTableIds:
        - !Ref ProxySubnetRouteTable
      PolicyDocument:
        Version: "2012-10-17"
        Statement:
          # Read object access to any bucket
          - Effect: Allow
            Principal: "*"
            Action: "s3:GetObject*"
            Resource:
              - "arn:aws:s3:::*"
```

Figure 6: Example endpoint policy for S3 read-only access
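The `s3:GetObject*` action in this policy relies on IAM-style wildcards. The sketch below is a simplified model of that matching for the single Allow statement, assuming `*` matches any run of characters, `?` matches one character, action names compare case-insensitively and ARNs case-sensitively. It does not implement full IAM policy evaluation, and the helper names are hypothetical.

```python
import re


def iam_wildcard_match(pattern, value, case_sensitive=True):
    """Match IAM-style wildcards: '*' is any run of characters, '?' is one."""
    regex = "".join(
        ".*" if ch == "*" else "." if ch == "?" else re.escape(ch) for ch in pattern
    )
    flags = 0 if case_sensitive else re.IGNORECASE
    return re.fullmatch(regex, value, flags) is not None


# The single Allow statement from the Proxy Subnet endpoint policy.
READ_ONLY_STATEMENT = {"Action": "s3:GetObject*", "Resource": "arn:aws:s3:::*"}


def allowed_by_read_only_policy(action, resource):
    # Actions match case-insensitively; resource ARNs match case-sensitively.
    return iam_wildcard_match(
        READ_ONLY_STATEMENT["Action"], action, case_sensitive=False
    ) and iam_wildcard_match(READ_ONLY_STATEMENT["Resource"], resource)


for action in ("s3:GetObject", "s3:GetObjectTagging", "s3:PutObject"):
    print(action, allowed_by_read_only_policy(action, "arn:aws:s3:::any-bucket/key.txt"))
```

The trailing `*` admits related read actions such as `s3:GetObjectTagging`, while write actions such as `s3:PutObject` fall outside the statement and are implicitly denied.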

S3 VPC Endpoint Policy for Private Subnet Zone

The sample endpoint policy below restricts access to whitelisted S3 buckets to IAM users and roles within the same AWS Organization, using the aws:PrincipalOrgID global condition key.

```yaml
Resources:
  PrivateSubnetS3Endpoint:
    Type: AWS::EC2::VPCEndpoint
    Properties:
      VpcId: !Ref Vpc
      ServiceName: !Sub "com.amazonaws.${AWS::Region}.s3"
      RouteTableIds:
        - !Ref PrivateSubnetRouteTable
      PolicyDocument:
        Version: "2012-10-17"
        Statement:
          # Read/write object access to specific buckets in the same region
          - Effect: Allow
            Principal: "*"
            Action: "s3:*"
            Resource:
              - "arn:aws:s3:::my-bucket-001/*"
              - "arn:aws:s3:::my-bucket-002/*"
            Condition:
              StringEquals:
                "aws:PrincipalOrgID": ["o-xxxxxxxxxxx"]
```

Figure 7: Example endpoint policy for S3 read-write access within the same AWS Organization

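The Private Subnet endpoint policy combines a resource whitelist with an organization condition. The sketch below approximates its single Allow statement, assuming the bucket ARNs and placeholder organization ID from the example. It uses `fnmatch.fnmatchcase` as a stand-in for IAM wildcard matching (which differs in that `fnmatch` also interprets `[...]`, harmless for these ARNs), and the function name is hypothetical.

```python
from fnmatch import fnmatchcase

# Values copied from the Private Subnet endpoint policy example.
ALLOWED_RESOURCES = ["arn:aws:s3:::my-bucket-001/*", "arn:aws:s3:::my-bucket-002/*"]
ALLOWED_ORG_ID = "o-xxxxxxxxxxx"


def allowed_by_private_endpoint_policy(action, resource, principal_org_id):
    """Approximate the Allow statement: any S3 action on objects in the listed
    buckets, only for principals in the given AWS Organization."""
    action_ok = fnmatchcase(action.lower(), "s3:*")
    resource_ok = any(fnmatchcase(resource, pattern) for pattern in ALLOWED_RESOURCES)
    # StringEquals on aws:PrincipalOrgID is an exact, case-sensitive comparison.
    org_ok = principal_org_id == ALLOWED_ORG_ID
    return action_ok and resource_ok and org_ok


print(allowed_by_private_endpoint_policy(
    "s3:PutObject", "arn:aws:s3:::my-bucket-001/file.txt", "o-xxxxxxxxxxx"))
print(allowed_by_private_endpoint_policy(
    "s3:PutObject", "arn:aws:s3:::non-whitelisted-bucket/file.txt", "o-xxxxxxxxxxx"))
```

Both conditions must hold: a principal from the right organization still cannot reach a bucket that is missing from the resource list.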
Network Access Control Lists (NACLs)

If an organisation uses NACLs as an additional network control, they also need to be configured for this design. Because the S3 VPC Endpoint works by creating routes to the public IP address ranges of the regional S3 service, the NACL rules must allow traffic to and from those ranges.

AWS public IP ranges for the S3 service are published in the ip-ranges.json file (https://ip-ranges.amazonaws.com/ip-ranges.json) and can also be retrieved with the AWS Tools for Windows PowerShell cmdlet Get-AWSPublicIpAddressRange.

Note: These IP ranges change over time, and AWS publishes notifications of changes through the AmazonIpSpaceChanged SNS topic.
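Outside PowerShell, the same ranges can be extracted from ip-ranges.json with a short script. The sketch below assumes the published document layout (a `prefixes` list whose entries carry `ip_prefix`, `region` and `service` fields) and covers IPv4 only; the function names are hypothetical.

```python
import json
import urllib.request

IP_RANGES_URL = "https://ip-ranges.amazonaws.com/ip-ranges.json"


def fetch_ip_ranges(url=IP_RANGES_URL):
    """Download and parse the published AWS IP ranges document."""
    with urllib.request.urlopen(url, timeout=30) as response:
        return json.load(response)


def service_prefixes(ip_ranges, service, region):
    """Return the sorted, de-duplicated IPv4 prefixes for one service and region."""
    return sorted(
        {
            entry["ip_prefix"]
            for entry in ip_ranges["prefixes"]
            if entry["service"] == service and entry["region"] == region
        }
    )
```

For example, `service_prefixes(fetch_ip_ranges(), "S3", "ap-southeast-2")` would return the current S3 prefixes for the Sydney region. Keeping the download separate from the filtering makes the filter easy to test against a saved copy of the file.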

```powershell
PS C:\> Get-AWSPublicIpAddressRange -ServiceKey S3 -Region ap-southeast-2 | where {$_.IpAddressFormat -eq "Ipv4"} | select IpPrefix

IpPrefix
--------
54.231.248.0/22
54.231.252.0/24
52.92.52.0/22
52.95.128.0/21
```

A sample NACL configuration allowing use of the S3 VPC Endpoint would look like the following.

Inbound rules:

| Rule # | Type | Protocol | Port Range | Source | Allow / Deny |
|---|---|---|---|---|---|
| 100 | ALL Traffic | ALL | ALL | 10.0.0.0/16 | ALLOW |
| 10000 | Custom TCP Rule | TCP (6) | 1024-65535 | 54.231.248.0/22 | ALLOW |
| 10001 | Custom TCP Rule | TCP (6) | 1024-65535 | 54.231.252.0/24 | ALLOW |
| 10002 | Custom TCP Rule | TCP (6) | 1024-65535 | 52.92.52.0/22 | ALLOW |
| 10003 | Custom TCP Rule | TCP (6) | 1024-65535 | 52.95.128.0/21 | ALLOW |
| * | ALL Traffic | ALL | ALL | 0.0.0.0/0 | DENY |

Outbound rules:

| Rule # | Type | Protocol | Port Range | Destination | Allow / Deny |
|---|---|---|---|---|---|
| 100 | ALL Traffic | ALL | ALL | 10.0.0.0/16 | ALLOW |
| 10000 | HTTPS (443) | TCP (6) | 443 | 54.231.248.0/22 | ALLOW |
| 10001 | HTTPS (443) | TCP (6) | 443 | 54.231.252.0/24 | ALLOW |
| 10002 | HTTPS (443) | TCP (6) | 443 | 52.92.52.0/22 | ALLOW |
| 10003 | HTTPS (443) | TCP (6) | 443 | 52.95.128.0/21 | ALLOW |
| * | ALL Traffic | ALL | ALL | 0.0.0.0/0 | DENY |
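Because the S3 rules in both NACL tables follow a fixed pattern per prefix, they can be generated from the prefix list. The sketch below assumes rule numbering starts at 10000 as in the tables, and uses dictionary keys modelled loosely on the NetworkAclEntry properties (`RuleNumber`, `Protocol`, `RuleAction`, `Egress`, `CidrBlock`, `PortRange`); it omits the NACL ID and does not call any AWS API. The function name is hypothetical.

```python
def nacl_rules_for_prefixes(prefixes, first_rule_number=10000):
    """Build inbound and outbound NACL entries for a list of S3 prefixes:
    inbound return traffic on ephemeral ports and outbound HTTPS."""
    inbound, outbound = [], []
    for offset, cidr in enumerate(prefixes):
        common = {
            "RuleNumber": first_rule_number + offset,
            "Protocol": 6,  # TCP
            "RuleAction": "allow",
            "CidrBlock": cidr,
        }
        # Inbound: responses from S3 arrive on the client's ephemeral ports.
        inbound.append({**common, "Egress": False,
                        "PortRange": {"From": 1024, "To": 65535}})
        # Outbound: HTTPS requests to the S3 endpoint addresses.
        outbound.append({**common, "Egress": True,
                         "PortRange": {"From": 443, "To": 443}})
    return inbound, outbound


inbound, outbound = nacl_rules_for_prefixes(
    ["54.231.248.0/22", "54.231.252.0/24", "52.92.52.0/22", "52.95.128.0/21"]
)
```

Rule numbers can repeat between the two lists because NACL inbound and outbound rules are numbered independently.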

Squid Proxy Domain Whitelist

To restrict proxy access exclusively to the S3 VPC Endpoints, the regional S3 domains have to be added to the Squid whitelist configuration. Note that locking down access this way requires the region to be set explicitly in the AWS CLI and other AWS SDK-based tools, so that requests are sent to the whitelisted regional S3 domain rather than to another S3 endpoint.

```text
.s3.ap-southeast-2.amazonaws.com
.s3.dualstack.ap-southeast-2.amazonaws.com
.sts.amazonaws.com
```

Figure 10. Sample Squid whitelist file
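The leading dot in each whitelist entry matters. The sketch below approximates Squid's dstdomain matching, assuming the file is loaded into a dstdomain ACL: an entry with a leading dot matches that domain and any subdomain, and matching ignores case. It is an illustration rather than Squid's own logic, and `domain_allowed` and `s3_virtual_host` are hypothetical helpers.

```python
WHITELIST = [
    ".s3.ap-southeast-2.amazonaws.com",
    ".s3.dualstack.ap-southeast-2.amazonaws.com",
    ".sts.amazonaws.com",
]


def domain_allowed(host, whitelist=WHITELIST):
    """Approximate dstdomain: '.example.com' matches example.com and its
    subdomains; an entry without a leading dot must match exactly."""
    host = host.lower().rstrip(".")
    for entry in whitelist:
        entry = entry.lower()
        if entry.startswith("."):
            if host == entry[1:] or host.endswith(entry):
                return True
        elif host == entry:
            return True
    return False


def s3_virtual_host(bucket, region):
    # Virtual-hosted-style regional S3 endpoint for a bucket.
    return f"{bucket}.s3.{region}.amazonaws.com"


print(domain_allowed(s3_virtual_host("whitelisted-bucket", "ap-southeast-2")))  # True
print(domain_allowed(s3_virtual_host("singapore-bucket", "ap-southeast-1")))    # False
print(domain_allowed("s3.amazonaws.com"))                                       # False
```

This mirrors why the region has to be set explicitly: only hostnames built from the whitelisted region pass the check.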

In this example, the STS endpoint has also been whitelisted for tools that request temporary credentials from the AWS Security Token Service (STS). For example, the AWS Tools for Windows PowerShell may have profiles mapped to SAML federated roles.

Testing the Solution

The following test cases demonstrate the functionality of the solution. In the first example, we attempt to write to a bucket whitelisted in the S3 VPC Endpoint policy:

```shell
export HTTPS_PROXY=http://private-proxy.c0.hosted.zone:80

aws s3api put-object --bucket whitelisted-bucket --key file.txt --body /path/to/file --region ap-southeast-2

# CLI response
{
    "ETag": "\"0a1b2c3d4e5f6a7b8c9d0e1f2a3b\""
}

# /var/log/squid/access.log
1525312345.999 1679 10.x.x.x TCP_TUNNEL/200 3413 CONNECT whitelisted-bucket.s3.ap-southeast-2.amazonaws.com:443 - HIER_DIRECT/52.95.132.2 -
```

In the next example, we attempt to write to a bucket that is not whitelisted in the S3 VPC Endpoint policy:

```shell
export HTTPS_PROXY=http://private-proxy.c0.hosted.zone:80

aws s3api put-object --bucket non-whitelisted-bucket --key file.txt --body /path/to/file --region ap-southeast-2

# CLI response
An error occurred (AccessDenied) when calling the PutObject operation: Access Denied

# /var/log/squid/access.log
1525323456.999 1558 10.x.x.x TCP_TUNNEL/200 4122 CONNECT non-whitelisted-bucket.s3.ap-southeast-2.amazonaws.com:443 - HIER_DIRECT/52.95.134.18 -
```

Squid allows the tunnel (TCP_TUNNEL/200) because the regional S3 domain is whitelisted, but the request is then rejected by the S3 VPC Endpoint policy, which does not list this bucket.

In this example, we attempt to write to a bucket in another region:

```shell
export HTTPS_PROXY=http://private-proxy.c0.hosted.zone:80

aws s3api put-object --bucket singapore-bucket --key file.txt --body /path/to/file --region ap-southeast-1

# CLI response
HTTPSConnectionPool(host='singapore-bucket.s3.ap-southeast-1.amazonaws.com:443', port=443): Max retries exceeded with url: /file.txt (Caused by ProxyError('Cannot connect to proxy.', error('Tunnel connection failed: 403 Forbidden',)))

# /var/log/squid/access.log
1525334567.999 119 10.x.x.x TCP_DENIED/403 4068 CONNECT singapore-bucket.s3.ap-southeast-1.amazonaws.com:443 - HIER_NONE/- text/html
```

Here Squid itself refuses the connection (TCP_DENIED/403) because the ap-southeast-1 S3 domain is not in the whitelist.

And finally, we attempt to write to a private whitelisted bucket from a public IP address:

```shell
aws s3api put-object --bucket whitelisted-bucket --key file.txt --body /path/to/file --region ap-southeast-2

# CLI response
An error occurred (AccessDenied) when calling the PutObject operation: Access Denied

# No entries in /var/log/squid/access.log, as the request takes the public route
```

The bucket policy denies the request because it does not arrive through one of the designated S3 VPC Endpoints.
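When validating the solution with more than a handful of requests, it helps to summarise the proxy log rather than read it by eye. The sketch below parses lines in Squid's default native access.log format (timestamp, elapsed milliseconds, client, result code/status, bytes, method, URL, user, hierarchy code/peer, content type), assuming no custom logformat is configured and no field contains spaces. The `SquidLogEntry` type and parser name are hypothetical.

```python
from typing import NamedTuple


class SquidLogEntry(NamedTuple):
    timestamp: float
    elapsed_ms: int
    client: str
    result_code: str
    http_status: int
    size_bytes: int
    method: str
    url: str
    user: str
    hierarchy_code: str
    peer: str
    content_type: str


def parse_native_log_line(line):
    """Parse one line of Squid's default native access.log format."""
    (ts, elapsed, client, code_status, size, method, url, user,
     hierarchy, content_type) = line.split()
    result_code, status = code_status.split("/", 1)
    hierarchy_code, peer = hierarchy.split("/", 1)
    return SquidLogEntry(float(ts), int(elapsed), client, result_code, int(status),
                         int(size), method, url, user, hierarchy_code, peer,
                         content_type)


entry = parse_native_log_line(
    "1525334567.999 119 10.x.x.x TCP_DENIED/403 4068 CONNECT "
    "singapore-bucket.s3.ap-southeast-1.amazonaws.com:443 - HIER_NONE/- text/html"
)
print(entry.result_code, entry.http_status, entry.url)
```

Counting `TCP_DENIED` entries per destination host, for instance, quickly shows which domains clients are trying to reach outside the whitelist.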

Implementation Considerations

When building a solution using this approach, it is important to keep in mind the following:

CloudFormation

If you are building with CloudFormation, consider using the AWS::Include transform to maintain static mappings, such as the public IP ranges allocated to the S3 service.

The mappings file can be stored in S3 in the following format:

```yaml
PublicIPs:
  ap-southeast-1:
    S3: [ 52.92.56.0/22, 52.219.76.0/22, 52.219.40.0/22, 52.219.32.0/21 ]
    DynamoDB: [ 52.94.11.0/24 ]
  ap-southeast-2:
    S3: [ 54.231.248.0/22, 54.231.252.0/24, 52.92.52.0/22, 52.95.128.0/21 ]
    DynamoDB: [ 52.94.13.0/24 ]
```

These mappings can be referenced in a CloudFormation template using the following syntax:

```yaml
Mappings:
  "Fn::Transform":
    Name: "AWS::Include"
    Parameters:
      Location: "s3://MyAmazonS3BucketName/aws-services-public-ips-v1.yaml"
```

This approach is particularly useful when deploying different network stacks across multiple accounts that need to maintain the same set of IP addresses. When using this approach, ensure the S3 bucket that stores these boilerplate files allows cross-account access.

Note: Additional automation can be added to respond to changes in these public ranges.
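As one hypothetical piece of such automation, a job triggered by the AmazonIpSpaceChanged notification could compare the prefixes in the mappings file with the newly published ones and only update the file and dependent stacks when they differ. The sketch below shows just that comparison; how the two lists are obtained, and the function name `diff_prefixes`, are assumptions.

```python
import ipaddress


def diff_prefixes(current, published):
    """Compare prefixes from the mappings file with newly published ones.

    Returns (added, removed) as sorted lists of normalised CIDR strings."""
    current_set = {str(ipaddress.ip_network(p)) for p in current}
    published_set = {str(ipaddress.ip_network(p)) for p in published}
    return (
        sorted(published_set - current_set, key=ipaddress.ip_network),
        sorted(current_set - published_set, key=ipaddress.ip_network),
    )


# 192.0.2.0/24 is a documentation range standing in for a hypothetical new prefix.
added, removed = diff_prefixes(
    ["54.231.248.0/22", "54.231.252.0/24", "52.92.52.0/22", "52.95.128.0/21"],
    ["54.231.248.0/22", "52.92.52.0/22", "52.95.128.0/21", "192.0.2.0/24"],
)
print(added, removed)  # ['192.0.2.0/24'] ['54.231.252.0/24']
```

Sorting by network rather than by string keeps the output in address order, which makes the resulting change easier to review.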

Extending the Solution to Other AWS Services

At the time of writing, the only other gateway-type endpoint is for the DynamoDB service, which would look almost identical in terms of implementation.

Interface endpoints, by contrast, can be attached to multiple subnets, are exposed as ENIs with private IP addresses deployed directly in each subnet, and use security groups to whitelist allowed IP ranges. If private DNS is enabled for an interface endpoint, the service's default DNS name resolves to the endpoint, so requests need no changes. If private DNS is not enabled, the endpoint-specific DNS name (which contains the VPC Endpoint identifier) has to be whitelisted in Squid and passed to the AWS CLI or AWS SDK through the service endpoint URL parameter, as in the following example.

Note: Multiple interface endpoints for the same service can be attached to the same subnet, but only one of them can have private DNS enabled.

```shell
aws ec2 describe-instances --endpoint-url https://vpce-01abc23456de78f9g-12abccd3.ec2.ap-southeast-2.vpce.amazonaws.com/
```

Integrating the Solution with Data Monitoring

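Data Loss Prevention (DLP) is a common consideration for enterprises that want to protect their own or their customers' sensitive data. The outlined solution can be integrated with active data monitoring tools over ICAP (supported in Squid 3.0 and above) to inspect and filter HTTP/HTTPS traffic based on policies defined by the enterprise. Inspecting the content of HTTPS traffic, which passes through Squid as CONNECT tunnels, also requires TLS interception on the proxy (for example, Squid's SslBump feature).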

Conclusion

As demonstrated, VPC Gateway Endpoints in combination with a well-defined proxy layer can be used to privately access AWS services such as S3. With a strong understanding of AWS networking and supporting services, it is possible to architect a highly governed, private access path to AWS services from on-premises data centres and secure data transfers to meet the compliance demands of the most regulated enterprises.

Richard Nguyen is a Consultant with Sourced Group based in Sydney, Australia. His primary focus is helping large enterprises deliver solutions that need to be secure and scalable in the cloud.